Introduction

Chatbots are becoming a common presence in our daily lives. The technology behind chatbots is maturing rapidly, and it is important to have the right tools to facilitate the deployment of chatbots in our projects.

A chatbot is an application that has written or spoken language as its user interface. This means that a conversation is the means through which questions are answered, requests are serviced, etc.

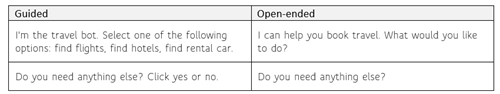

Bots can use various conversational styles, from structured and guided to free-form and open-ended. Based on user input, bots need to decide what the next step in their conversation flow is. Azure AI services include several features to simplify this task. These features can help a bot search for information, ask questions, or interpret the user's intent.

The interaction between users and bots is often free-form, and bots need to understand language naturally and contextually. In an open-ended conversation, there can be a wide range of user replies, and bots can provide more or less structure or guidance. The following table illustrates the difference between guided and open-ended questions.

In this article we will see how we can incorporate an Azure chatbot that uses Conversational Language Understanding (CLU) in a web app, using Azure Cognitive Services. The chatbot will be able to answer questions using natural language, and we will see how we can train the bot to suit our needs.

Language Understanding

Natural language understanding (NLU) is a branch of natural-language processing in artificial intelligence that deals with machine reading comprehension. It uses computer software to understand input in the form of sentences using text or speech. NLU enables human-computer interaction by analysing language and not just words.

Using the natural language understanding features of Azure Cognitive Services, we will build custom natural language understanding models to predict the overall intention of a user's message and extract important information from it.

Conversational Language Understanding (CLU)

Conversational language understanding (CLU) enables developers to build custom natural language understanding models to predict the overall intention of an incoming utterance and extract important information from it. CLU only provides the intelligence to understand the input text for the client application and doesn't perform any actions on its own.

To use CLU in our bot, we will create a Language resource and a conversation project, train and deploy the language model, and then implement a telemetry recognizer in our bot that forwards requests to the CLU API.
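Under the hood, the recognizer sends an HTTP request to the Language resource. The Python sketch below builds that request without sending it, then picks the top intent out of a response. The `analyze-conversations` route, the API version `2022-05-01` and the field names follow the CLU runtime REST API as we understand it. The endpoint, key, project and deployment names are placeholders, and so are the `BookFlight` intent and the 0.5 threshold.

```python
import json
import urllib.request

# Assumed CLU runtime API version; check the Language REST reference for newer ones.
API_VERSION = "2022-05-01"


def build_clu_request(endpoint: str, key: str, project: str,
                      deployment: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a CLU analyze-conversations request."""
    url = (f"{endpoint.rstrip('/')}/language/:analyze-conversations"
           f"?api-version={API_VERSION}")
    body = {
        "kind": "Conversation",
        "analysisInput": {
            # A single user utterance; ids are arbitrary labels chosen by the caller.
            "conversationItem": {"id": "1", "participantId": "user", "text": text}
        },
        "parameters": {"projectName": project, "deploymentName": deployment},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key,
                 "Content-Type": "application/json"},
        method="POST",
    )


def top_intent(response: dict, threshold: float = 0.5) -> str:
    """Return the predicted top intent, or "None" if its score is below threshold."""
    prediction = response["result"]["prediction"]
    scores = {i["category"]: i["confidenceScore"] for i in prediction["intents"]}
    intent = prediction["topIntent"]
    return intent if scores.get(intent, 0.0) >= threshold else "None"


if __name__ == "__main__":
    req = build_clu_request("https://pushgatewaybot.cognitiveservices.azure.com",
                            "<key>", "<clu-project>", "<deployment>",
                            "book me a flight to Lisbon")
    print(req.get_method(), req.full_url)
    # Hand-written response shape for illustration only, not real service output.
    sample = {"result": {"prediction": {
        "topIntent": "BookFlight",
        "intents": [{"category": "BookFlight", "confidenceScore": 0.92},
                    {"category": "None", "confidenceScore": 0.08}]}}}
    print(top_intent(sample))
```

The threshold check is there because the bot also has to decide what to do with low-confidence predictions. Mapping them to the project's `None` intent keeps that decision in one place.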

Questions and answers

The question-and-answer features of Azure Cognitive Services let us build knowledge bases to answer user questions. Knowledge bases represent semi-structured content, such as that found in FAQs, manuals, and documents.

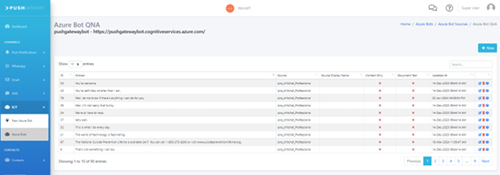

In our demonstration, we will incorporate the management of this knowledge base into our web app, which lets us dynamically make our bot smarter so that it answers the questions we want.

Question answering

Question answering provides cloud-based natural language processing (NLP) that allows us to create a natural conversational layer over our data. It's used to find the most appropriate answer for any input from our custom knowledge base of information.

We will use Azure Cognitive Services to create such a layer and make our bot understand the users' questions.
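Querying the knowledge base is a single REST call, so the flow is easy to see in a minimal sketch. The code below assumes the `query-knowledgebases` runtime route with API version `2021-10-01` and the default `production` deployment name. It builds the request without sending it, then chooses the best-scoring answer above a threshold. The endpoint, key, threshold and fallback text are illustrative placeholders.

```python
import json
import urllib.parse
import urllib.request

API_VERSION = "2021-10-01"  # assumed GA version of the question answering API


def build_query_request(endpoint: str, key: str, project: str, question: str,
                        deployment: str = "production", top: int = 3,
                        threshold: float = 0.3) -> urllib.request.Request:
    """Build (but do not send) a knowledge base query."""
    params = urllib.parse.urlencode({
        "projectName": project,
        "deploymentName": deployment,
        "api-version": API_VERSION,
    })
    url = f"{endpoint.rstrip('/')}/language/:query-knowledgebases?{params}"
    body = {"question": question, "top": top, "confidenceScoreThreshold": threshold}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key,
                 "Content-Type": "application/json"},
        method="POST",
    )


def best_answer(response: dict, threshold: float = 0.3,
                default: str = "Sorry, I don't have an answer for that.") -> str:
    """Pick the highest-scoring answer at or above threshold, else a fallback text."""
    candidates = [a for a in response.get("answers", [])
                  if a.get("confidenceScore", 0.0) >= threshold]
    if not candidates:
        return default
    return max(candidates, key=lambda a: a["confidenceScore"])["answer"]
```

The threshold is also filtered on the client side, so a low-score placeholder answer from the service still falls back to the bot's own default text.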

In the implementation, we start by creating the necessary Azure services that will allow us to create our bot.

Azure Language resource

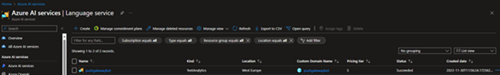

First, we will create a Language Service in the Azure portal:

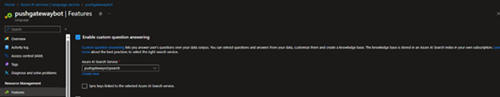

To use custom question answering, we first have to create an Azure AI Search service:

And then we can enable it in the Azure Language resource pushgatewaybot:

Azure AI Bot Service

We will create a new Azure AI Bot Service, in our case called PushGatewayBot:

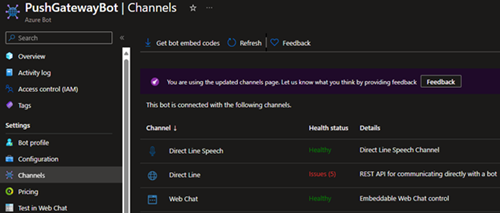

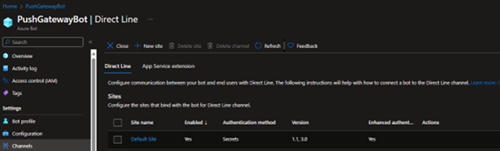

In the Channels section, we will add the following:

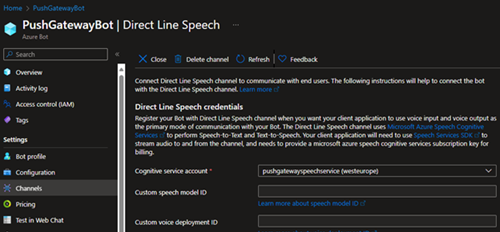

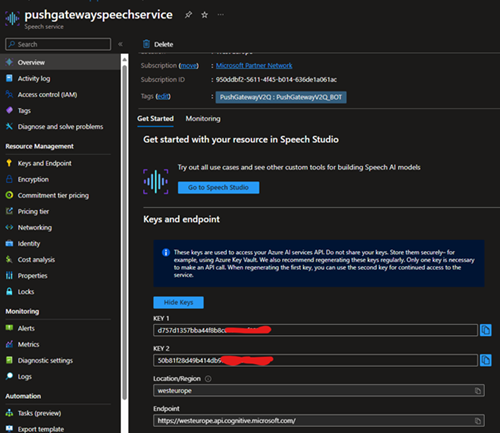

The Direct Line Speech channel will allow us to add speech recognition to our bot. To configure it, we will need to create a Speech service, in this case named pushgatewayspeechservice.

We can then add it to our Azure AI Bot Service by editing the Direct Line Speech channel:

Direct Line will allow our web app to communicate with the bot. In the web app, we will create a page through which users interact with the bot.

When editing the Direct Line channel, we find the Default Site, where we will add trusted origins that match the configuration of our web app:

Add Language Resource to Language Studio

To create our custom question answering project, we will use Azure AI Language Studio at https://language.cognitive.azure.com/.

In the Language Studio settings, we will add the Language resource we created in the Azure Portal. We’ll have to include the subscription, resource group and name of the resource accordingly:

Create Custom Question Answering project

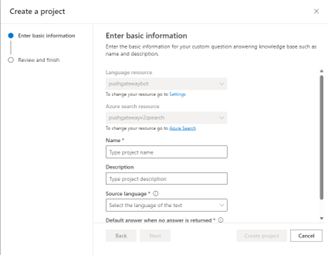

We will start by creating a new Custom Question Answering project. When creating the new project, pushgatewaybot, the Language resource and Azure Search resource created in the Azure portal will appear:

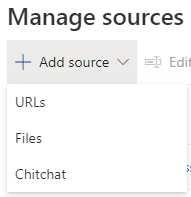

In Manage Sources, we will add a basic source of information called Chitchat, which makes the bot answer basic questions right from the start:

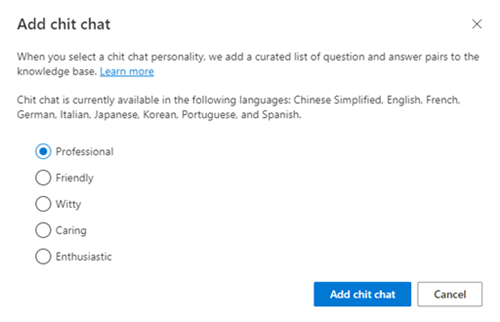

We can choose the type of response the bot will give from this selection:

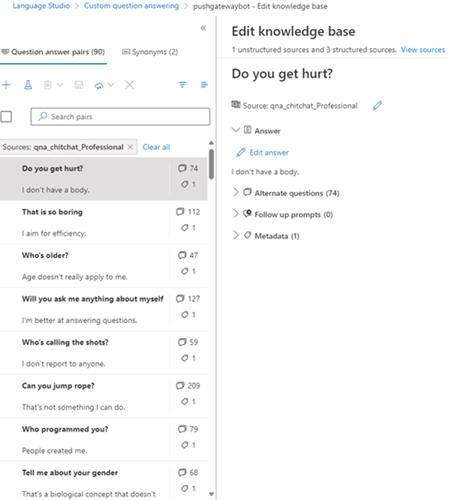

The source will appear in the list and by selecting it we can see the question-answer pairs that were created by default:

We can also add our own question/answer pairs by creating our own source. Sources can be added in Language Studio from URLs or files. Later we will see how we can add question/answer pairs to our sources from our PushGateway web app.

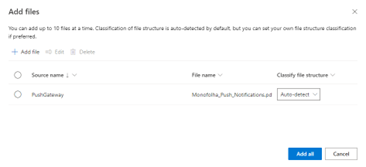

For now, we will add a source from the promotion flyer of PushGateway:

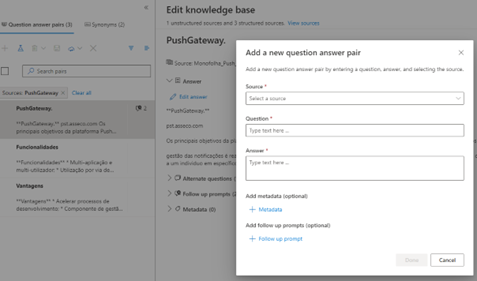

As soon as Language Studio finishes processing, we will have the new source available, with the question/answer pair added automatically:

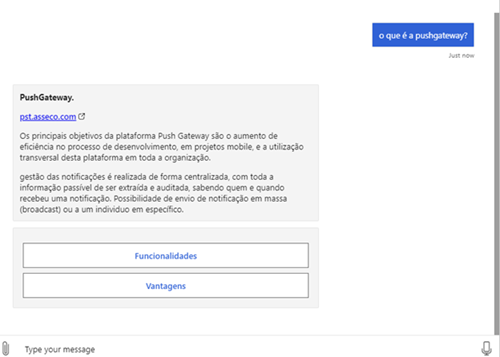

As we can see, Language Studio has automatically added what PushGateway is, its functionalities, and its advantages, based on the information gathered from the flyer. Notice that the information is also structured: when it added what PushGateway is, it also added follow-up prompts:

This means that after answering what PushGateway is, the bot will offer the user these two question/answer pairs (advantages and functionalities) as prompts.
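In a query response, follow-up prompts arrive attached to the answer they belong to. The sketch below assumes the `dialog.prompts` list, with `displayOrder` and `displayText` fields, as we understand the question answering response format. It lists the prompts in display order. It also builds the body for the next query, which passes the previous answer's id as `context` so that the service can resolve the follow-up. The sample answer in the usage note is hand-written, not real service output.

```python
import json


def follow_up_prompts(answer: dict) -> list:
    """Return the display texts of an answer's follow-up prompts, in display order."""
    prompts = answer.get("dialog", {}).get("prompts", [])
    ordered = sorted(prompts, key=lambda p: p.get("displayOrder", 0))
    return [p["displayText"] for p in ordered]


def follow_up_query(answer: dict, previous_question: str, chosen_prompt: str) -> dict:
    """Body for the next query, carrying the previous answer as context."""
    return {
        "question": chosen_prompt,
        "context": {
            "previousQnaId": answer["id"],
            "previousUserQuery": previous_question,
        },
    }


if __name__ == "__main__":
    # Hand-written example answer; the prompt texts are hypothetical.
    answer = {
        "id": 1,
        "answer": "PushGateway is ...",
        "dialog": {"isContextOnly": False, "prompts": [
            {"displayOrder": 1, "qnaId": 3, "displayText": "Functionalities"},
            {"displayOrder": 0, "qnaId": 2, "displayText": "Advantages"},
        ]},
    }
    print(follow_up_prompts(answer))
    print(json.dumps(follow_up_query(answer, "What is PushGateway?", "Advantages")))
```

The web chat renders these display texts as the clickable suggestions. A user who types the question instead can still reach the same pair through an ordinary query.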

In order to add our own question/answer pair, we can edit any source:

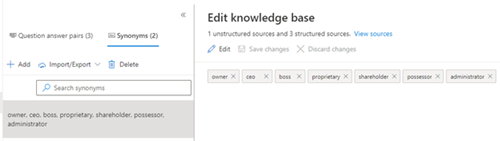

We can also manage a list of synonyms to improve the user interaction:

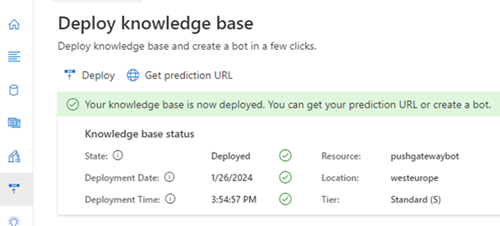
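The synonym list can also be maintained outside Language Studio. The sketch below assumes the authoring route `projects/{projectName}/synonyms` with API version `2021-10-01` and a body of `{"value": [{"alterations": [...]}]}`, as we understand the authoring REST API. In that API, this PUT replaces the whole list. The code only builds the request. The synonym groups, endpoint and key are hypothetical.

```python
import json
import urllib.parse
import urllib.request

API_VERSION = "2021-10-01"  # assumed authoring API version


def build_synonyms_request(endpoint: str, key: str, project: str,
                           groups: list) -> urllib.request.Request:
    """Build (not send) a request replacing the project's synonym groups.

    Each group is a list of interchangeable terms, e.g. ["pushgateway", "push gateway"].
    """
    for group in groups:
        if len(group) < 2:
            raise ValueError(f"a synonym group needs at least two terms: {group!r}")
    path = f"/language/query-knowledgebases/projects/{urllib.parse.quote(project)}/synonyms"
    url = f"{endpoint.rstrip('/')}{path}?api-version={API_VERSION}"
    body = {"value": [{"alterations": list(group)} for group in groups]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key,
                 "Content-Type": "application/json"},
        method="PUT",
    )
```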

Any modifications to the knowledge base require a deployment:
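Deployment can also be triggered from code, for example after the web app edits the knowledge base. This is a minimal sketch that assumes the authoring route `projects/{projectName}/deployments/{deploymentName}` with API version `2021-10-01`. We understand this call to be a long-running operation: the service answers `202 Accepted` and returns an `Operation-Location` header that can be polled. Treat that behavior, and the `production` deployment name, as assumptions.

```python
import urllib.parse
import urllib.request

API_VERSION = "2021-10-01"  # assumed authoring API version


def build_deploy_request(endpoint: str, key: str, project: str,
                         deployment: str = "production") -> urllib.request.Request:
    """Build (not send) the request that deploys the current knowledge base."""
    path = (f"/language/query-knowledgebases/projects/{urllib.parse.quote(project)}"
            f"/deployments/{urllib.parse.quote(deployment)}")
    url = f"{endpoint.rstrip('/')}{path}?api-version={API_VERSION}"
    # Empty body: the deployment name in the URL is all the request carries.
    return urllib.request.Request(
        url, data=b"", headers={"Ocp-Apim-Subscription-Key": key}, method="PUT")


def start_deployment(req: urllib.request.Request, timeout: float = 30.0):
    """Send the request and return the URL to poll for the job status, if any."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.headers.get("Operation-Location")
```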

Create Bot Web App

Next, we will create a web app containing the configuration needed to show a chat page where users can interact with the bot.

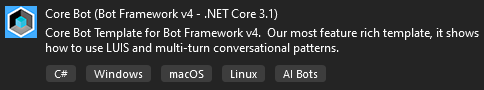

Let’s start by creating an empty Core Bot template in Visual Studio:

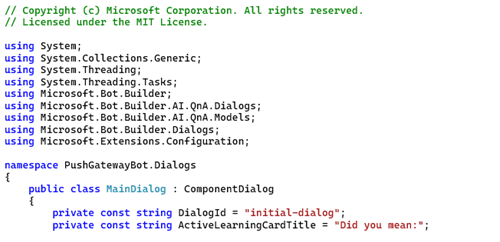

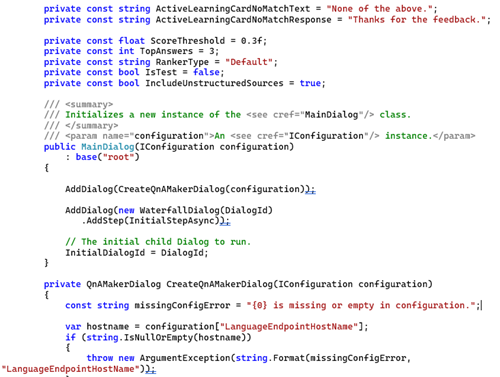

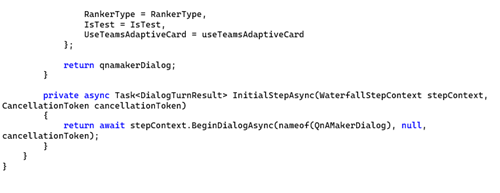

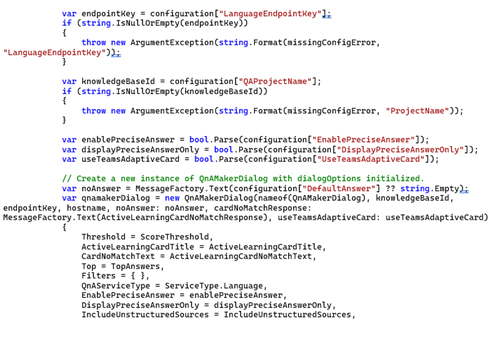

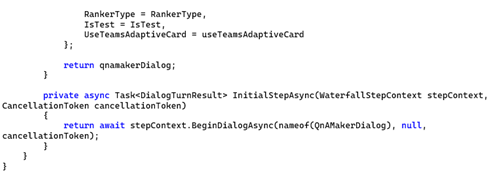

We will modify the /Dialogs/MainDialog.cs file to adapt it to our Custom Question Answering Project:

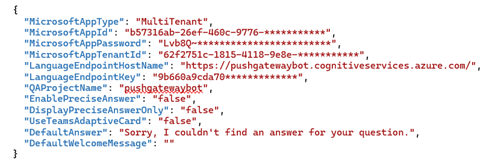

As for the configuration parameters, we will incorporate them in appsettings.json:

This information is gathered from the Azure Portal and Language Studio, and it will allow us to use the resources created earlier.
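The exact keys depend on what MainDialog.cs reads. As an illustration only, the sketch below generates an appsettings.json with key names modeled on the Bot Framework custom question answering sample (`ProjectName`, `LanguageEndpointKey`, `LanguageEndpointHostName` and the `MicrosoftApp*` settings). Treat every key name as an assumption and rename the keys to match your dialog code. The check for empty required values catches the most common deployment mistake, a placeholder left unfilled.

```python
import json
from pathlib import Path

# Key names are assumptions modeled on the Bot Framework question answering sample.
REQUIRED_KEYS = ("MicrosoftAppId", "MicrosoftAppPassword", "ProjectName",
                 "LanguageEndpointKey", "LanguageEndpointHostName")


def build_appsettings(app_id: str, app_password: str, project: str,
                      language_key: str, language_host: str,
                      default_answer: str = "Sorry, I could not find an answer.") -> dict:
    """Collect the bot's settings; values come from the Azure portal and Language Studio."""
    return {
        "MicrosoftAppType": "MultiTenant",  # match the type chosen for the Azure Bot resource
        "MicrosoftAppId": app_id,
        "MicrosoftAppPassword": app_password,
        "MicrosoftAppTenantId": "",
        "ProjectName": project,
        "LanguageEndpointKey": language_key,
        "LanguageEndpointHostName": language_host,
        "DefaultAnswer": default_answer,
    }


def missing_settings(settings: dict) -> list:
    """Names of required settings that are absent or empty."""
    return [k for k in REQUIRED_KEYS if not settings.get(k)]


def write_appsettings(path: str, settings: dict) -> None:
    missing = missing_settings(settings)
    if missing:
        raise ValueError(f"missing values for: {', '.join(missing)}")
    Path(path).write_text(json.dumps(settings, indent=2), encoding="utf-8")
```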

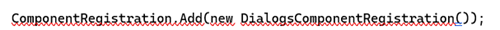

In the Startup.cs file we must add the line:

We are now ready to deploy our bot to a web app, just like any ordinary web app.

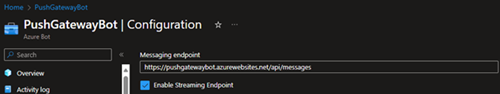

Returning to our PushGatewayBot resource in the Azure portal, we will define the messaging endpoint in order to receive the messages:
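The messaging endpoint is the public HTTPS address of the bot's `api/messages` route, which the Core Bot template exposes. The small sketch below derives it from an App Service name and checks it. It assumes the app is hosted on the default `*.azurewebsites.net` domain; the app name is a placeholder. When the bot runs locally, the Bot Framework templates listen on port 3978 by default, which is the address to give the emulator.

```python
from urllib.parse import urlparse

# Local address used with the Bot Framework Emulator (template default port).
LOCAL_ENDPOINT = "http://localhost:3978/api/messages"


def messaging_endpoint(app_name: str) -> str:
    """Public endpoint for a bot hosted on the default App Service domain (assumed)."""
    return f"https://{app_name}.azurewebsites.net/api/messages"


def looks_like_bot_endpoint(url: str) -> bool:
    """Azure Bot Service requires HTTPS; the path check mirrors the template's route."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.path.rstrip("/").endswith("/api/messages")
```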

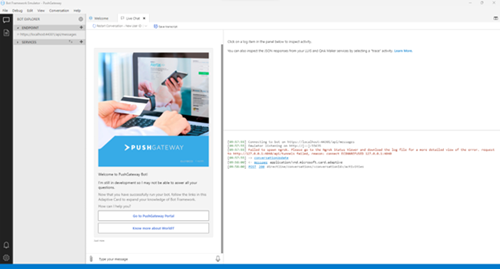

Before applying our bot to a web chat page, we can test it using the Bot Framework Emulator (V4), available at https://github.com/Microsoft/BotFramework-Emulator/blob/master/README.md.

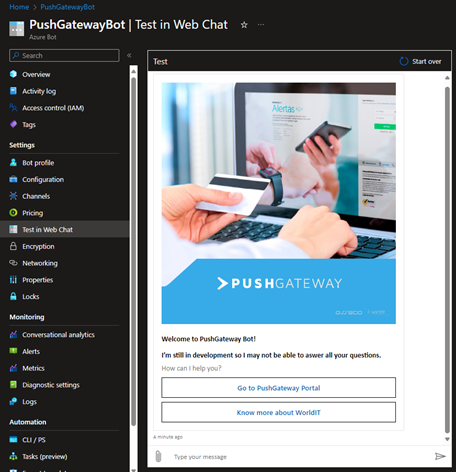

If successfully deployed, we can also test the bot in the Azure Portal:

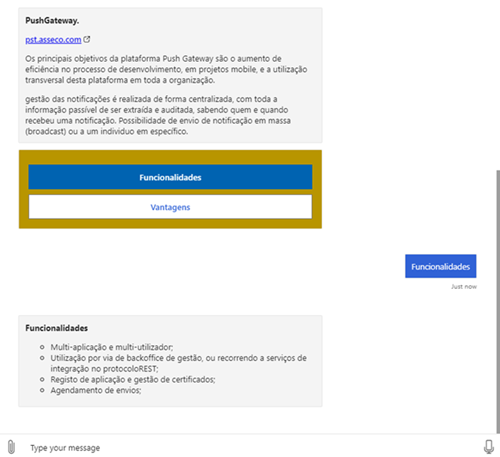

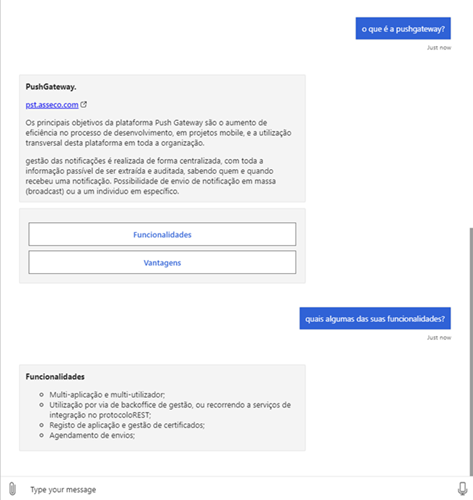

Now, for a brief test of our knowledge base, we will ask our bot a few questions:

Notice that because our data is structured, two follow-up prompts were added automatically when we added the PushGateway flyer, as shown in the above image. The user can click them and obtain the next question/answer pair:

Or the user can simply ask:

We can also test the Chitchat knowledge base added previously:

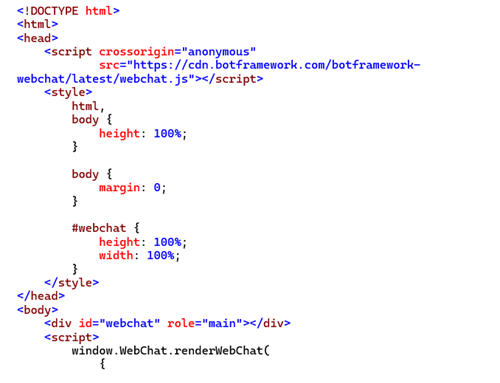

Add Bot to a web page

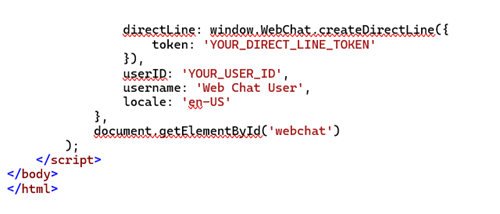

Now, in order to use our bot outside the emulator and in our very own web page, we must use the Direct Line channel configured previously in the Azure portal:

To obtain a token, we must send a POST request to https://directline.botframework.com/v3/directline/tokens/generate with an Authorization header containing "Bearer" followed by the Direct Line secret set in the Azure portal:

For more information, see https://learn.microsoft.com/en-us/azure/bot-service/rest-api/bot-framework-rest-direct-line-3-0-authentication?view=azure-bot-service-4.0.

The user ID can be set to any string that suits your project. These values should be set server-side for security.
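Because the secret must never reach the browser, the token exchange belongs in server-side code. This Python sketch builds and sends that exchange. It assumes the request and response shapes from the Direct Line 3.0 authentication docs: a `user` object in the body, and `token`, `conversationId` and `expires_in` in the reply. It adds the `dl_` prefix that those docs use for user IDs. The secret and user ID are placeholders.

```python
import json
import urllib.request

TOKEN_URL = "https://directline.botframework.com/v3/directline/tokens/generate"


def build_token_request(secret: str, user_id: str) -> urllib.request.Request:
    """Build (not send) the Direct Line token request; run this server-side only."""
    if not user_id.startswith("dl_"):
        user_id = "dl_" + user_id  # prefix used for user IDs in the Direct Line auth docs
    body = {"user": {"id": user_id}}
    return urllib.request.Request(
        TOKEN_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {secret}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def fetch_token(secret: str, user_id: str, timeout: float = 10.0):
    """Send the request and return (token, conversation_id, expires_in_seconds)."""
    with urllib.request.urlopen(build_token_request(secret, user_id),
                                timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["token"], payload["conversationId"], payload["expires_in"]
```

The page then receives only the short-lived token, which expires on its own and can be regenerated for each visitor.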

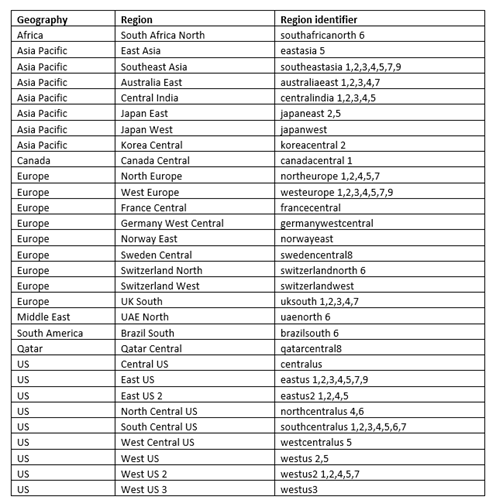

If we want to use the speech service previously created in the Azure portal, we must modify the renderWebChat call. First, we need to obtain a token from the speech service, as well as its region. The Azure Speech service is available in multiple regions, and each one has unique endpoints. The following table shows the different region identifiers:

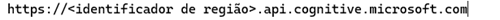

The speech service endpoints will be like so:

In order to obtain the authorization token for the speech service, we must make a POST request to

https://<region_identifier>.api.cognitive.microsoft.com/sts/v1.0/issueToken

with a request header "Ocp-Apim-Subscription-Key" containing one of the keys shown in the Azure portal for the Speech service we created:

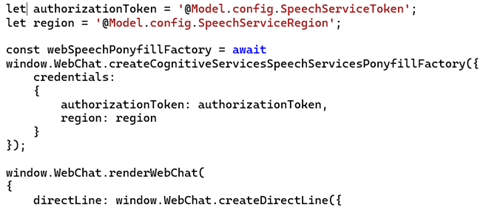
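Like the Direct Line token, the speech token should be requested server-side so that the subscription key stays private. A minimal Python sketch of that request follows. It assumes region identifiers are lowercase alphanumeric, such as `westeurope`, and rejects anything else before building the URL. The service returns the token as plain text; the Speech documentation gives it a lifetime of 10 minutes, so the page needs a fresh one periodically. The key is a placeholder.

```python
import re
import urllib.request


def build_speech_token_request(region: str, key: str) -> urllib.request.Request:
    """Build (not send) the issueToken request for a Speech resource."""
    if not re.fullmatch(r"[a-z0-9]+", region):
        raise ValueError(f"unexpected region identifier: {region!r}")
    url = f"https://{region}.api.cognitive.microsoft.com/sts/v1.0/issueToken"
    # Empty POST body; the key travels in the header.
    return urllib.request.Request(
        url, data=b"", headers={"Ocp-Apim-Subscription-Key": key}, method="POST")


def fetch_speech_token(region: str, key: str, timeout: float = 10.0) -> str:
    """Send the request; the response body is the token itself, as plain text."""
    with urllib.request.urlopen(build_speech_token_request(region, key),
                                timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```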

Now to apply it to the web chat page, we need to modify the code like so:

The web chat now has a speech button that allows the user to interact with the bot using voice commands:
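One way to connect these pieces is for the server to render the chat page with both tokens already embedded. The sketch below is not the author's page. It assumes Web Chat's `renderWebChat`, `createDirectLine` and `createCognitiveServicesSpeechServicesPonyfillFactory` functions, loaded from the Bot Framework CDN, with speech credentials given as `authorizationToken` and `region`. The tokens are JSON-encoded and `</` is escaped so that a value cannot close the script tag early.

```python
import json
from string import Template

PAGE = Template("""<!DOCTYPE html>
<html lang="en">
<head><meta charset="utf-8"><title>PushGatewayBot</title></head>
<body>
  <div id="webchat" role="main" style="height: 100vh"></div>
  <script src="https://cdn.botframework.com/botframework-webchat/latest/webchat.js"></script>
  <script>
    (async function () {
      const webSpeechPonyfillFactory =
        await window.WebChat.createCognitiveServicesSpeechServicesPonyfillFactory({
          credentials: { authorizationToken: $speech_token, region: $region }
        });
      window.WebChat.renderWebChat(
        {
          directLine: window.WebChat.createDirectLine({ token: $directline_token }),
          webSpeechPonyfillFactory
        },
        document.getElementById("webchat")
      );
    })();
  </script>
</body>
</html>
""")


def js_string(value: str) -> str:
    """Encode a value as a JavaScript string literal that is safe inside <script>."""
    return json.dumps(value).replace("</", "<\\/")


def render_chat_page(directline_token: str, speech_token: str, region: str) -> str:
    """Fill the page template with tokens obtained server-side."""
    return PAGE.substitute(
        directline_token=js_string(directline_token),
        speech_token=js_string(speech_token),
        region=js_string(region),
    )
```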

Question Answering Service API

The Question Answering service is a cloud-based API service that lets you create a conversational question-and-answer layer over your existing data. We can use it to build a knowledge base by extracting questions and answers from our semi-structured content, including FAQs, manuals, and documents. Using the Azure Cognitive Language Services Question Answering client library for .NET, we can use this API to manage our knowledge bases from within our projects without using Language Studio, which provides better integration with our projects and a better user experience.

For more information on how to use the client, see https://github.com/Azure/azure-sdk-for-net/tree/Azure.AI.Language.QuestionAnswering_1.1.0/sdk/cognitivelanguage/Azure.AI.Language.QuestionAnswering.
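For a web app that is not written in .NET, the same authoring operations are available over REST. The sketch below assumes the Update QnAs operation, a PATCH to `projects/{projectName}/qnas` with API version `2021-10-01`, whose body is a list of `{"op": "add", "value": {...}}` items; that is our reading of the authoring API. It builds the request for adding a pair from the PushGateway web app. The `source` name, endpoint and key are hypothetical. As with edits made in Language Studio, the knowledge base still has to be deployed before the bot sees the new pair.

```python
import json
import urllib.parse
import urllib.request

API_VERSION = "2021-10-01"  # assumed authoring API version


def qna_add_operation(questions: list, answer: str,
                      source: str = "pushgateway-webapp") -> dict:
    """One 'add' operation; the source name is a hypothetical label for the pair."""
    if not questions:
        raise ValueError("at least one question is required")
    return {"op": "add",
            "value": {"questions": list(questions), "answer": answer, "source": source}}


def build_update_qnas_request(endpoint: str, key: str, project: str,
                              operations: list) -> urllib.request.Request:
    """Build (not send) a batch update of question/answer pairs."""
    path = f"/language/query-knowledgebases/projects/{urllib.parse.quote(project)}/qnas"
    url = f"{endpoint.rstrip('/')}{path}?api-version={API_VERSION}"
    return urllib.request.Request(
        url,
        data=json.dumps(list(operations)).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key,
                 "Content-Type": "application/json"},
        method="PATCH",
    )
```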

Conclusions

We’ve seen how to create a Custom Question Answering Azure bot project and integrate it into any web page. This type of project lets us customize a list of questions and answers extracted from our content corpus to provide a conversational experience that suits our needs. But there are other types of projects that may better suit the requirements of our projects:

- Conversational language understanding: allows us to build natural language understanding into bots so that they can perform actions, for example booking a flight by accepting natural language input from the user such as “book me a flight to Lisbon”;

- Custom text classification: allows us to train a classification model to classify text using our own data. It can be used in scenarios like automatic email or ticket triage in a support center, where high volumes of emails or tickets containing unstructured, free-form text and attachments must be sorted and delivered to the right department;

- Custom named entity recognition: enables developers to build custom AI models to extract domain-specific entities from unstructured text, such as contracts or financial documents. By creating a Custom NER project, we can iteratively label data, train, evaluate, and improve model performance before making it available for consumption;

- Custom conversation summarization: allows us to train a summarization model to create or generate summaries of conversations;

- Custom abstractive summarization: allows us to train a summarization model to create or generate summaries of documents;

- Orchestration: allows us to connect and orchestrate different types of projects, like Conversational Language Understanding and Custom Question Answering.

As you can imagine, this is just the beginning of chatbot development. As chatbots continue to improve their communication skills with customers, feedback has become increasingly positive. According to a recent study, 80% of customers had a pleasant experience interacting with bots (https://www.searchenginejournal.com/80-of-consumers-love-chatbots-heres-what-the-data-says-study/295833/).

We will see what the future of chatbots and AI will bring us.

#Chatbots #Azure #PushGateway #Innovation #Technology